Short intro

In bare-metal installations...

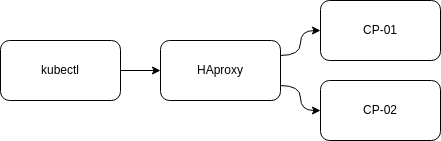

When you created your cluster, you wanted two control planes for continuity: restarting one of them, or even keeping one of them off for some period of time, should not affect the cluster as a whole.

In theory this is fantastic, but in practice it requires a little more work than just deploying the second control plane. The focus of this article is to answer an obvious question:

Which address should you use to balance between both control plane nodes?

The cluster looks like:

192.168.68.50 server-cp-01

192.168.68.51 server-cp-02

192.168.68.52 server-node-01

192.168.68.53 server-node-02

192.168.68.54 server-node-03

192.168.68.55 server-node-04

What you need is an address that reaches both CPs in a round-robin manner.

Quick and Dirty

Let's take a new address like:

192.168.44.100 cluster-api

Prepare front end access on haproxy

Kubectl checks the certificate served on port 6443, including whether the name you are connecting to is listed in it. As a result, you may receive errors saying that "cluster-api" is not a name recognized on the certificate. To solve this, you need to create a new certificate for the haproxy frontend with name(s) corresponding to the new API address you want to use.
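To see which names a given certificate actually accepts, you can list its Subject Alternative Names. The following is only a minimal sketch: `list_sans` is a hypothetical helper name (not part of openssl or kubectl), and it relies on nothing more than `openssl x509 -text` and `grep`.

```shell
# list_sans CERT: print the Subject Alternative Name extension of a PEM certificate.
# "list_sans" is a hypothetical helper name used only for this illustration.
list_sans() {
    # -text dumps the whole certificate; grep keeps the SAN header and the
    # line that follows it, which holds the DNS names and IP addresses.
    openssl x509 -noout -text -in "$1" | grep -A1 'Subject Alternative Name'
}
```

On a kubeadm-built control plane the apiserver certificate normally sits at /etc/kubernetes/pki/apiserver.crt (an assumption about your layout), so `list_sans /etc/kubernetes/pki/apiserver.crt` would show whether cluster-api is among the accepted names.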

First, let's create an openssl request conf file to issue the new certificate. I suggest creating it in /etc/kubernetes/pki on server-cp-01, because you will need other files that live there. The file is as follows:

# cat haproxy-req.conf

[req]

default_md = sha256

distinguished_name = dn

req_extensions = req_ext

default_bits = 4096

x509_extensions = v3_req

prompt = no

[dn]

CN=cluster-api.domain-name.net

[req_ext]

subjectAltName=@alt_names

[v3_req]

subjectAltName=@alt_names

[alt_names]

DNS.1=cluster-api.domain-name.net

DNS.2=cluster-api

IP.1=192.168.44.100

After that, you can execute the following commands:

Create a CSR with key for HAproxy frontend section certificate

# openssl req -new -nodes -keyout haproxy-k8s-cluster.key -out haproxy-k8s-cluster.csr -days 3650 -subj "/C=NL/ST=ZH/L=Alphen/O=SpectroNET/OU=K8s/CN=K8S API" -config haproxy-req.conf -extensions v3_req

This results in a CSR (Certificate Signing Request) file, which is used to issue the new certificate in the next command:

# openssl x509 -req -days 3650 -in haproxy-k8s-cluster.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out haproxy-k8s-cluster.crt -extfile haproxy-req.conf -extensions v3_req

This results in the new certificate, which accepts the names and IPs we wish to use. You can check the resulting certificate and its addresses with:

# openssl x509 -noout -text -in haproxy-k8s-cluster.crt | grep -F1 Alter

X509v3 extensions:

X509v3 Subject Alternative Name:

DNS:cluster-api.domain-name.net, DNS:cluster-api, IP Address:192.168.44.100

Combine the newly created certificate with the signing CA and the key into one file, to be used in the haproxy frontend configuration:

# cat haproxy-k8s-cluster.crt > k8s-cluster-front.pem

# cat ca.crt >> k8s-cluster-front.pem

# cat haproxy-k8s-cluster.key >> k8s-cluster-front.pem

And in the HAproxy main configuration add:

frontend k8s_cp

bind *:6443 ssl crt /etc/haproxy/certs/k8s-cluster-front.pem alpn h2,http/1.1 npn h2,http/1.1

option httplog

default_backend k8s_cluster_cp

Create a simulated client at the Backend section

When reaching the apiserver, the connection uses two-way (mutual) TLS authentication. In a very quick and dirty way, you can extract the certificates from your client (kubectl) and add them to haproxy, so that its requests look like kubectl queries.
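For the two-way authentication to work, the client certificate and the private key you hand to haproxy must belong together. However you obtain them, a quick way to confirm that is to compare their public keys. This is a minimal sketch, and `cert_matches_key` is a hypothetical helper name, not an openssl or haproxy command.

```shell
# cert_matches_key CERT KEY: succeed only if the certificate and the
# private key share the same public key.
# "cert_matches_key" is a hypothetical helper name used for illustration.
cert_matches_key() {
    # Public key embedded in the certificate, in PEM form.
    cmk_cert_pub=$(openssl x509 -noout -pubkey -in "$1") || return 2
    # Public key derived from the private key, in the same PEM form.
    cmk_key_pub=$(openssl pkey -pubout -in "$2") || return 2
    [ "$cmk_cert_pub" = "$cmk_key_pub" ]
}
```

A call such as `cert_matches_key client.crt client.key && echo match` (file names are placeholders) prints `match` only when the pair is consistent. Because openssl picks the first matching PEM block, a combined file can usually be passed as both arguments.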

Assuming your ~/.kube/config file has only one access configured, it contains only one "client-certificate-data" and one "client-key-data" entry, so you can run the following.

(Here, though, I am extracting them from the /etc/kubernetes/admin.conf file on cp-01.)

cat /etc/kubernetes/admin.conf | grep client-certificate-data | awk '{print $2}' | base64 -d > cluster-client.crt

cat /etc/kubernetes/admin.conf | grep client-key-data | awk '{print $2}' | base64 -d >> cluster-client.crt

If you have more than one cluster configured, you can copy and paste the values and run:

echo "large-base64-client-certificate-data-pasted" | base64 -d > cluster-client.crt

echo "large-base64-client-key-data-pasted" | base64 -d >> cluster-client.crt

You will then need to create a complete CA identity for haproxy, as such:

cat /etc/kubernetes/pki/ca.crt > cluster-ca.crt

cat /etc/kubernetes/pki/ca.key >> cluster-ca.crt

Bring these two files to the haproxy certs location and configure the backend with:

backend k8s_cluster_cp

mode http

server k8s-cluster-cp-01 192.168.68.50:6443 ssl verify required ca-file /etc/haproxy/certs/cluster-ca.crt crt /etc/haproxy/certs/cluster-client.crt

server k8s-cluster-cp-02 192.168.68.51:6443 ssl verify required ca-file /etc/haproxy/certs/cluster-ca.crt crt /etc/haproxy/certs/cluster-client.crt

Reconfigure your access, and possibly the cluster

The first thing is your own ~/.kube/config, where you read:

server: https://192.168.68.50:6443

or the DNS name of cp-01 or cp-02. You should now be able to change it to the newly created haproxy address, 192.168.44.100, or cluster-api if the DNS name is recognized (as it should be):

server: https://cluster-api:6443

And you should be able to successfully run:

[mememe@myworkstation ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

server-cp-01 Ready control-plane 3d19h v1.27.3

server-cp-02 Ready control-plane 38h v1.27.3

server-node-01 Ready <none> 3d13h v1.27.3

server-node-02 Ready <none> 3d13h v1.27.3

server-node-03 Ready <none> 3d13h v1.27.3

server-node-04 Ready <none> 3d13h v1.27.3

Further, you can observe (and possibly change if you wish) the following in the ConfigMaps from kube-system:

kubeadm-config

controlPlaneEndpoint: "cluster-api:6443"

kube-proxy

server: https://cluster-api:6443
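The `server:` change is the same one-line substitution whether you apply it to your ~/.kube/config or to a saved copy of a kubeconfig-style file. As a minimal sketch, the hypothetical helper `set_api_server` below (my own name, not a kubectl feature) rewrites every `server:` line in a file, keeping its indentation. It assumes the URL contains no `|` character.

```shell
# set_api_server FILE URL: point every "server:" line in FILE at URL.
# "set_api_server" is a hypothetical helper name used for illustration.
set_api_server() {
    # Keep the original indentation and key, replace only the value.
    sed "s|^\([[:space:]]*server:\).*|\1 $2|" "$1" > "$1.new" || return 1
    # Write back through the original file so its permissions are preserved.
    cat "$1.new" > "$1" && rm -f "$1.new"
}
```

For example, `set_api_server ~/.kube/config https://cluster-api:6443` would switch a single-cluster kubeconfig to the haproxy address. Take a backup copy first, since the file is modified in place.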
